What is few-shot learning

Few-shot Learning Explained: Examples, Applications, Research

Data powers machine learning solutions. Quality datasets let us train models to the required detection and classification accuracy, but collecting enough training data is often a challenge in itself. Data-intensive applications, for instance, need human annotators to label a huge number of samples, which makes projects hard to manage and costly for businesses. On top of that, data acquisition can be restricted by safety regulations, privacy, or ethical concerns.

When we have a limited dataset with only a handful of samples per class, few-shot learning may be useful. This model training approach makes use of small datasets and achieves acceptable levels of accuracy even when data is scarce.

In this article, I will explain what few-shot learning is, how it works under the hood, and share with you the results of research on how to get optimum results from a few-shot learning model using a relatively simple approach for image classification tasks.

Before moving to technologies and principles under the hood of Few-Shot learning, let’s have some fun.

The left side of the image depicts an alpaca, and the right side shows a llama. These animals are similar, although there are differences. The crucial distinctions are that llamas are bigger and heavier than alpacas and have long snouts, while alpacas' snouts are flattened. The most readily distinguishable difference is that llamas have long rounded ears that resemble bananas, while alpacas' ears are short and pretty.

You’ve learned to distinguish the two animals from the Camelidae family. Taking into account the difference in ears, try to guess what animal is depicted in the query image below.

You’ve probably found out that it’s a llama. Four samples are enough for humans to distinguish one animal from another, even though these animals look similar. But will a computer be able to deal with such a task? Can the machine make the appropriate prediction if the class consists of two samples?

The task is more complicated than normal object classification because a lack of samples makes it impossible to train a deep neural network from scratch. That’s where few-shot learning comes in.

What is Few-Shot Learning?

The starting point of machine learning app development is a dataset, and usually the more data, the better the result: with a large amount of data, the model's predictions become more accurate. In few-shot learning (FSL), however, we aim for almost the same accuracy with far less data. This reduces the cost of collecting and labeling the data needed to train a model. Working with a small dataset also cuts computational costs.

Note that FSL is also referred to as low-shot learning (LSL); in some sources, this term covers any ML problem where the volume of the training dataset is limited.

Approaches of Few-shot Learning

To tackle few-shot and one-shot machine learning problems, we can apply one of two approaches.

1. Data-level approach

If there is not enough data to fit the algorithm without overfitting or underfitting, additional data should be added. This idea lies at the core of the data-level approach, and external data sources help to implement it. For instance, suppose we need to build an image classifier but lack labeled examples for some categories. We can draw on external sources of similar images, even unlabeled ones, by incorporating them in a semi-supervised manner.

Besides using external data sources, we can produce novel data samples from the same distribution as the training data. For example, adding random noise to the original images generates new data.
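As a minimal sketch of the noise-based augmentation mentioned above, the snippet below perturbs each pixel with Gaussian noise and clips the result to the valid range. The function names, the `sigma` value, and the representation of an image as a nested list of intensities in [0, 1] are assumptions made for illustration; a real pipeline would normally work on image arrays.

```python
import random


def add_gaussian_noise(image, sigma=0.05, rng=None):
    """Return a noisy copy of `image`, a 2D list of pixel intensities in [0, 1]."""
    rng = rng or random.Random()
    noisy = []
    for row in image:
        # Perturb each pixel, then clip back into the valid intensity range.
        noisy.append([min(1.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row])
    return noisy


def augment(image, copies=3, sigma=0.05, seed=0):
    """Generate `copies` noisy variants of one image, reproducibly via `seed`."""
    rng = random.Random(seed)
    return [add_gaussian_noise(image, sigma, rng) for _ in range(copies)]


# A toy 2x3 "image" (assumption: grayscale intensities in [0, 1]).
original = [[0.0, 0.5, 1.0], [0.2, 0.4, 0.6]]
variants = augment(original, copies=3)
```

Each variant keeps the label of the original image, so one labeled sample becomes several. The noise level has to stay small enough that the class is still recognizable.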

Alternatively, new image samples can be synthesized using the generative adversarial networks (GANs) technology. For example, with this technology, new images are produced from different perspectives if there are enough examples available in the training set.

2. Parameter-level approach

The parameter-level approach involves meta-learning — the technique that teaches the model to understand which features are crucial to perform machine learning tasks. This can be achieved by developing a strategy for controlling how the model’s parameter space is exploited.

Limiting the parameter space helps to counter overfitting: the algorithm is forced to generalize from a limited number of training samples. Another way to improve the algorithm is to guide it through the extensive parameter space.

The main task is to teach the algorithm to choose the best path through the parameter space so that it gives well-targeted predictions. This is what is usually meant by meta-learning.

Meta-learning employs a teacher model and a student model. The teacher model learns how to encapsulate the parameter space. The student model learns to classify the training examples. The output of the teacher model serves as the basis for training the student model.

Applications of Few-shot Learning

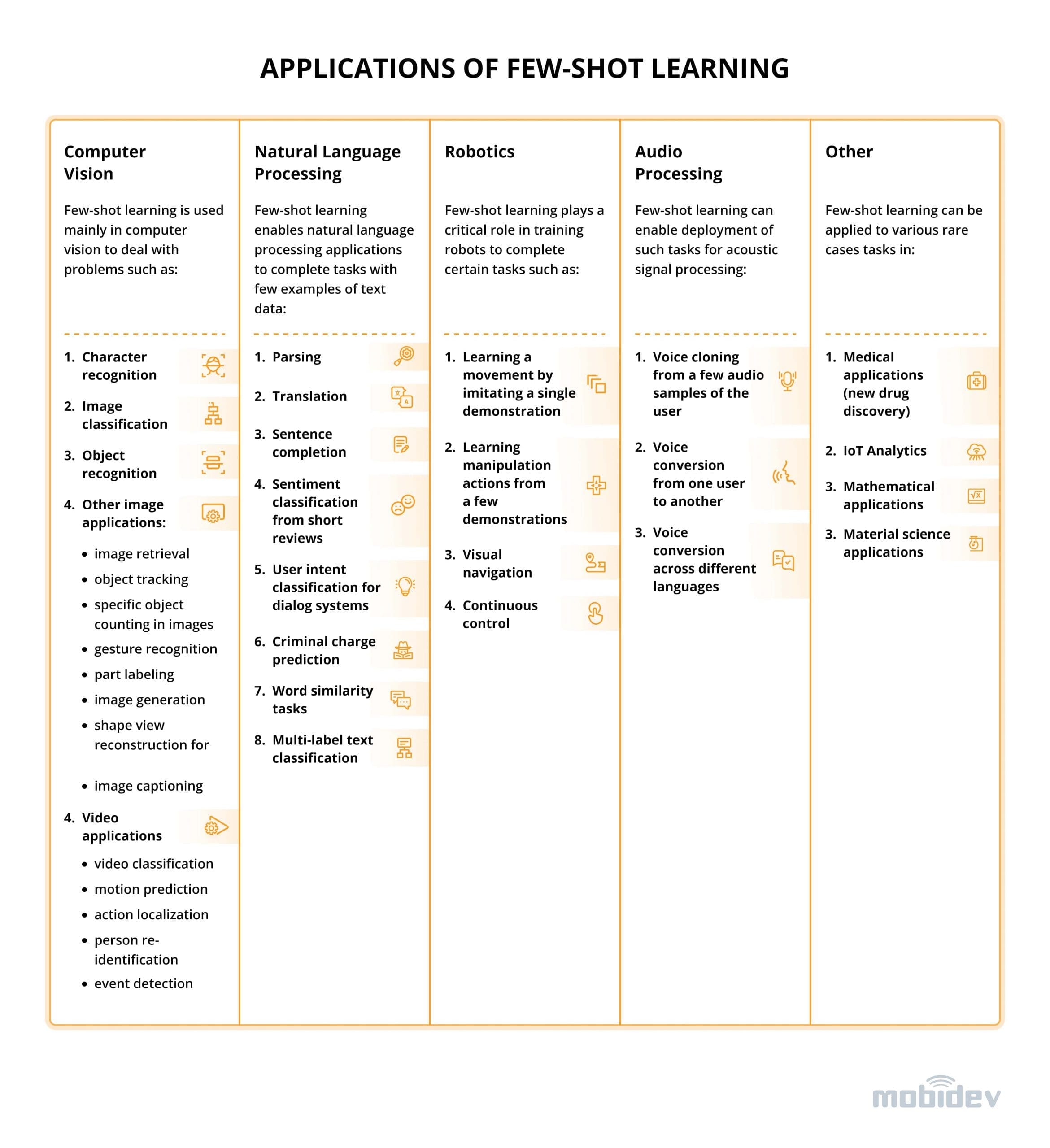

Few-shot learning underpins applications in several popular fields (Fig. 1):

In computer vision, FSL handles object and character recognition, image and video classification, scene location, and so on. NLP applies it to translation, text classification, sentiment analysis, and even criminal charge prediction. A typical case is character generation, where machines parse and create handwritten characters after a short training on a few examples. Other use cases include object tracking, gesture recognition, image captioning, and visual question answering.

Few-shot learning assists in training robots to imitate movements and navigate. In audio processing, FSL is capable of creating models that clone voice and convert it across various languages and users.

A remarkable example of a few-shot learning application is drug discovery. Here, the model is trained to explore new molecules and detect useful ones that can be used in new drugs. New molecules that have not gone through clinical trials can be toxic or insufficiently active, so it is crucial to train the model using a small number of samples.

Figure 1. Few-shot learning applications

Let's take a deeper dive into how few-shot learning works and review the results of MobiDev's research.

Research: Few-shot Learning For Image Classification

We selected an image classification problem: the model has to determine which category an image belongs to in order to identify the denomination of a coin. This could be used to quickly count the total sum of coins lying on a table by splitting the larger image into smaller ones, each containing an individual coin, and classifying each small image.

Data sets

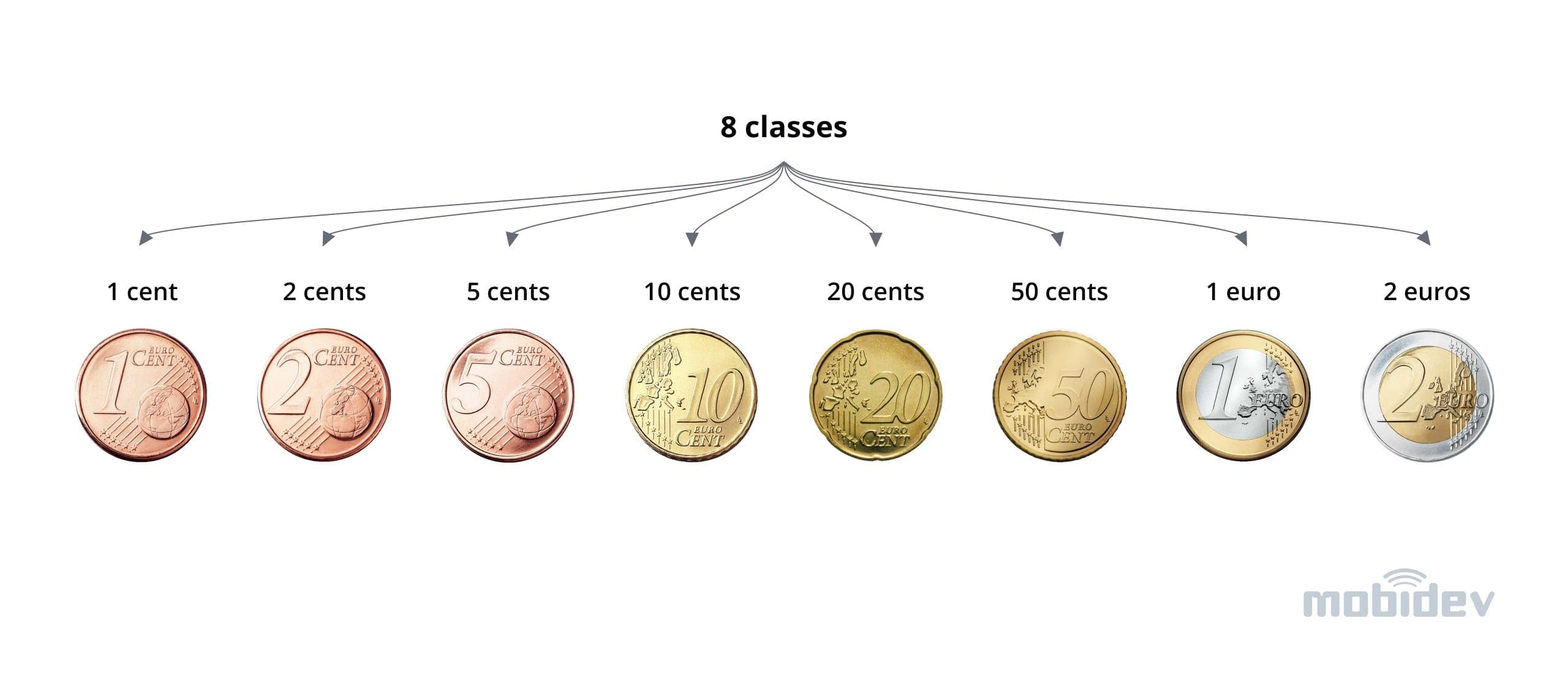

First of all, we needed some few-shot learning data to experiment with. For this purpose, we collected a dataset of euro coin images from public sources with 8 classes according to the number of coin denominations (Fig. 2).

Figure 2. Image classes with examples

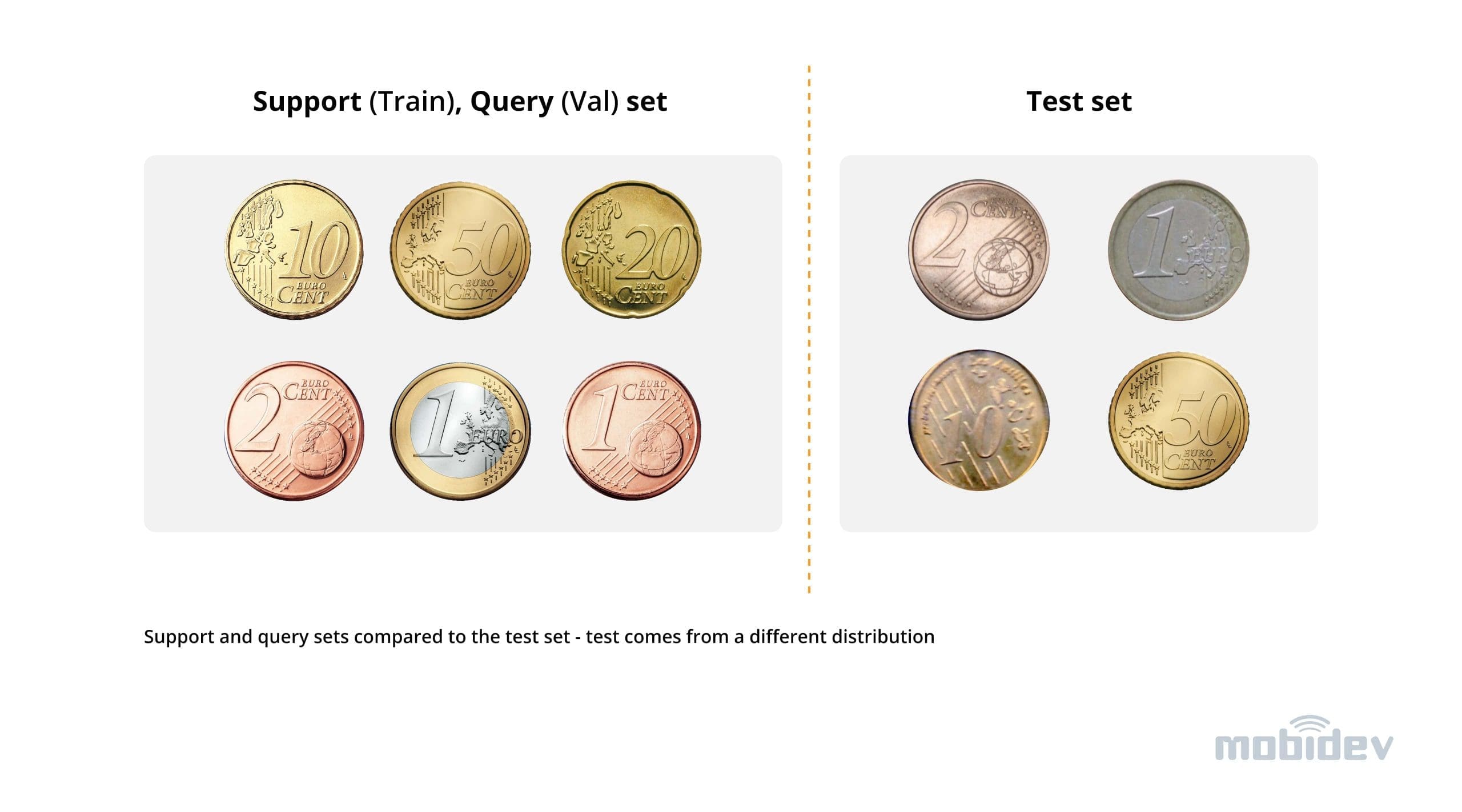

The data was split into 3 subsets: support (train), query (val), and test (statistics on the datasets can be seen in Fig. 3). The support and query sets were selected to come from one distribution, whereas the test set was purposefully chosen to come from a different one: its images are over- or underexposed, tilted, blurred, show coins with unusual colors, and so on.

This data preparation was done to simulate the production setting where the model is often trained and validated on good quality data from open datasets / internet scraping, whereas the data from the real operation conditions is usually different: users can capture images with different devices, have poor lighting conditions, and so on. A robust CV solution should be able to tackle these problems to a certain extent, and our goal is to figure out how this will work out for a few-shot learning approach which assumes we do not have much data to begin with.

Figure 3. Support and query sets compared to the test set – test comes from a different distribution

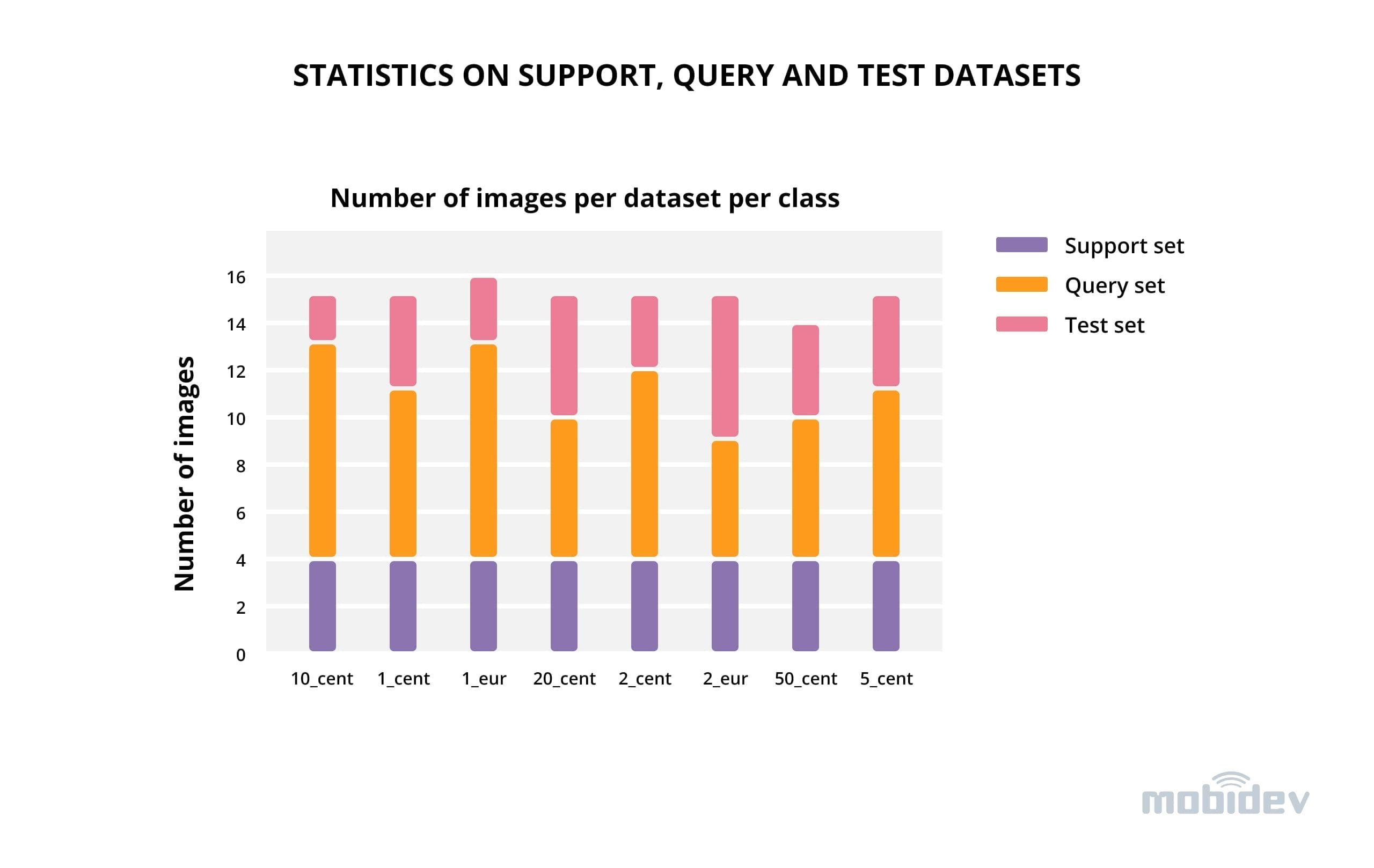

Finally, the statistics on the number of available data samples can be seen in Fig. 4. We managed to collect 121 data samples, about 15 images per coin denomination. The support set is balanced: each class has an equal number of samples, with up to 4 images per class for few-shot training. The query and test sets are slightly imbalanced and contain approximately 7 and 4 images per class, respectively. The number of samples per set is 32 for support, 57 for query, and 31 for test.

Figure 4. Statistics on support, query, and test datasets
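The balanced support set described above can be sketched as a per-class sampling step. This is only an illustration under stated assumptions: the file names, class labels, and the `sample_support_set` helper are hypothetical, and the toy dataset uses exactly 15 images per class rather than the real collection.

```python
import random
from collections import defaultdict


def sample_support_set(labeled_items, shots, seed=0):
    """Pick `shots` items per class; everything else is returned as a held-out pool."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for item, label in labeled_items:
        by_class[label].append(item)

    support, remainder = [], []
    for label in sorted(by_class):
        items = by_class[label][:]
        if len(items) < shots:
            raise ValueError(f"class {label!r} has fewer than {shots} items")
        rng.shuffle(items)
        # The first `shots` shuffled items form the support set for this class.
        support += [(item, label) for item in items[:shots]]
        remainder += [(item, label) for item in items[shots:]]
    return support, remainder


# Hypothetical file names: 8 coin classes with 15 images each.
classes = ["1c", "2c", "5c", "10c", "20c", "50c", "1eur", "2eur"]
dataset = [(f"{c}_{i:02d}.jpg", c) for c in classes for i in range(15)]
support, remainder = sample_support_set(dataset, shots=4)
```

With 8 classes and 4 shots, the support set contains 32 items, which matches the support set size reported above. The remaining items would then be divided into query and test sets.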

Selected approach

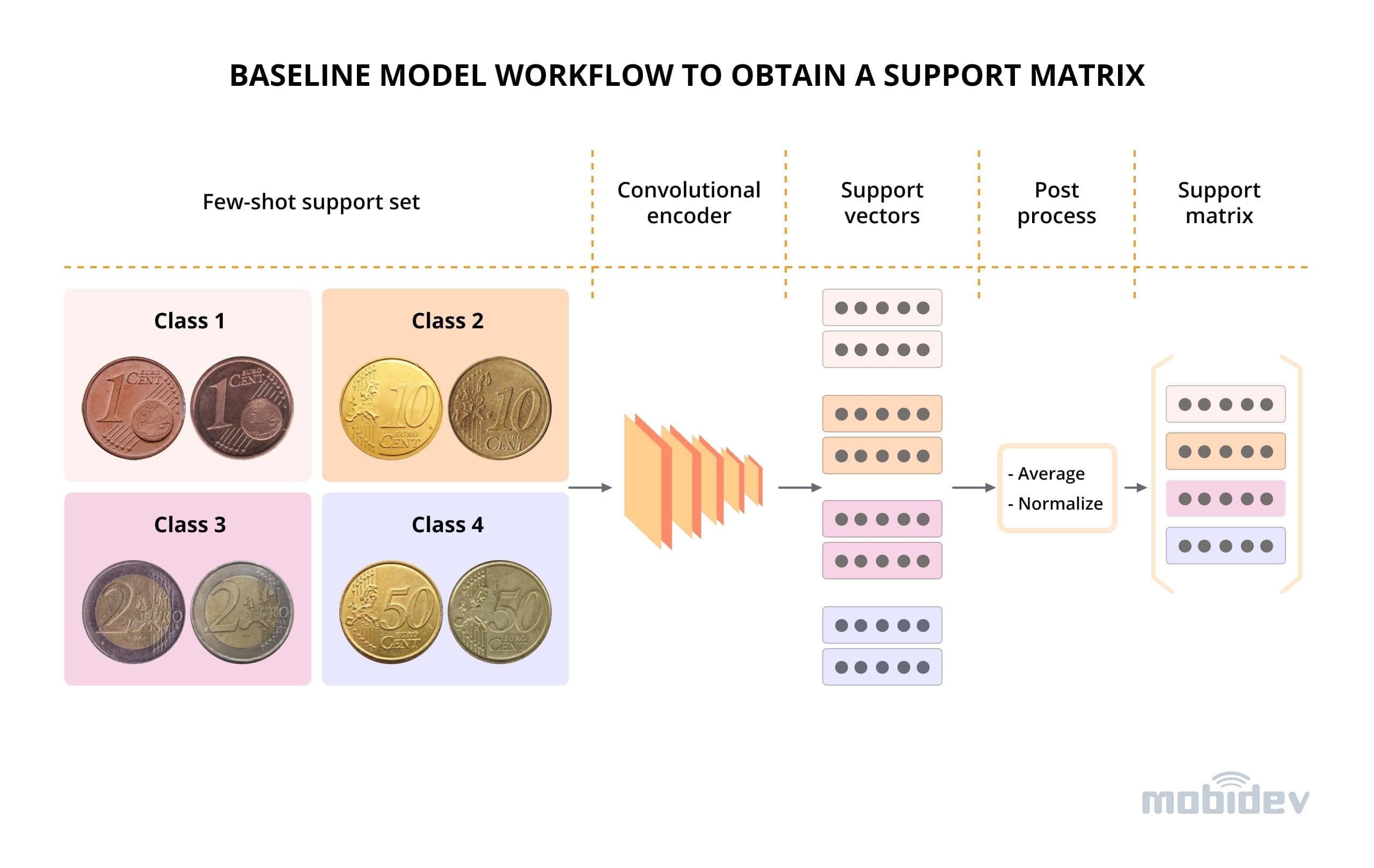

In the selected approach, we use a pre-trained Convolutional Neural Network (CNN) as an encoder to obtain compressed image representations. The model is able to produce meaningful image representations that reflect the content of the images since it has seen a large dataset and was pre-trained on ImageNet prior to few-shot learning. These image representations can then be used in few-shot learning and classification.

Figure 5. Baseline model workflow to obtain a support matrix
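The idea of classifying with encoder outputs can be sketched as follows. The sketch assumes that the support matrix holds one L2-normalized mean embedding per class and that a query image is assigned to the class with the highest cosine similarity. The source does not spell out these details, so treat them as one plausible reading. The 3-dimensional vectors are toy stand-ins for real CNN embeddings, and all function names are illustrative.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def build_support_matrix(embeddings, labels):
    """One row per class: the normalized mean of that class's normalized embeddings."""
    sums, counts = {}, {}
    for emb, label in zip(embeddings, labels):
        emb = l2_normalize(emb)
        if label not in sums:
            sums[label] = [0.0] * len(emb)
            counts[label] = 0
        sums[label] = [s + e for s, e in zip(sums[label], emb)]
        counts[label] += 1
    class_names = sorted(sums)
    matrix = [l2_normalize([s / counts[c] for s in sums[c]]) for c in class_names]
    return class_names, matrix


def classify(query_embedding, class_names, matrix):
    """Return the class whose support row has the highest cosine similarity."""
    q = l2_normalize(query_embedding)
    scores = [sum(a * b for a, b in zip(row, q)) for row in matrix]
    return class_names[scores.index(max(scores))]


# Toy vectors standing in for the outputs of a pre-trained CNN encoder.
support_embeddings = [[0.9, 0.1, 0.0], [1.0, 0.2, 0.1], [0.1, 0.9, 0.2], [0.0, 1.0, 0.1]]
support_labels = ["1 euro", "1 euro", "2 euro", "2 euro"]
names, support_matrix = build_support_matrix(support_embeddings, support_labels)
prediction = classify([0.8, 0.3, 0.0], names, support_matrix)
```

Because the rows and the query are unit-length, the dot product equals the cosine similarity, so no training is needed to obtain a first baseline prediction.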

Several steps can be taken to improve the performance of a few-shot learning algorithm; the ones we applied, fine-tuning and entropy regularization, are covered in the experiment results.


The Experiment Results

With the selected model configuration, we ran several experiments on the collected data. First of all, we used a baseline model without any fine-tuning or additional regularization. Even in this default setting the results were surprisingly decent as illustrated in Tab. 1: 0.66 / 0.62 weighted F1 score for query / test sets. We chose a weighted F1 metric as it takes into account imbalance in class sizes as well as combining both precision and recall scores.
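To make the metric concrete, here is a small dependency-free sketch of a weighted F1 score: the F1 of each class is weighted by that class's share of the true labels. The labels below are made up for illustration and are not taken from the experiment. In practice, scikit-learn's `f1_score` with `average="weighted"` is the usual way to compute the same quantity.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Per-class F1 averaged with weights equal to each class's share of y_true."""
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for cls, n_true in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
        fn = n_true - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        # Larger classes contribute proportionally more to the final score.
        score += f1 * n_true / total
    return score


# Made-up labels: three 1-cent, one 2-cent, and two 5-cent coins.
y_true = ["1c", "1c", "1c", "2c", "5c", "5c"]
y_pred = ["1c", "1c", "1c", "2c", "5c", "1c"]
print(round(weighted_f1(y_true, y_pred), 3))  # 0.817
```

In this toy case, the per-class F1 scores are 6/7, 1, and 2/3, and the largest class pulls the weighted average towards its own score.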

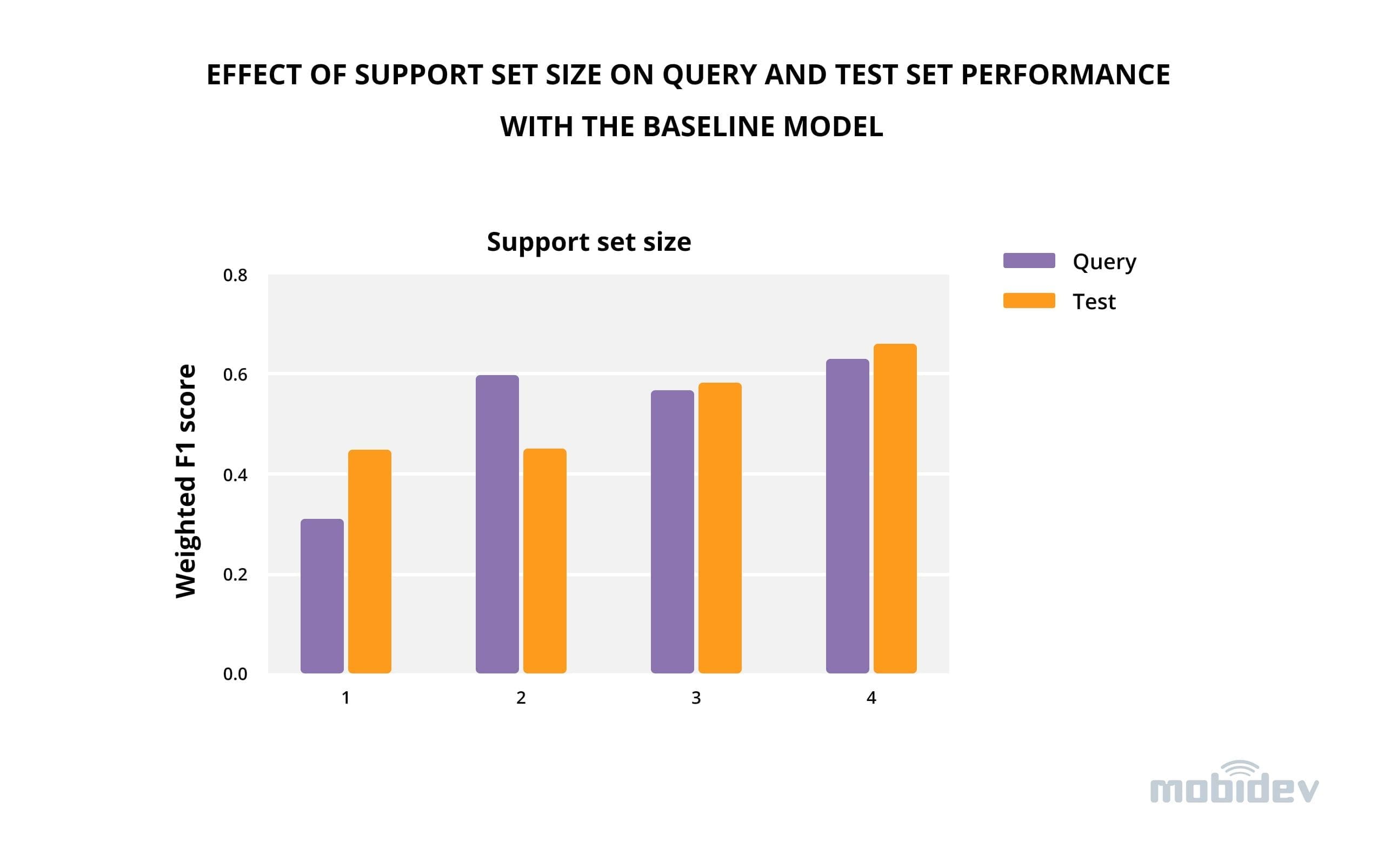

At this stage, we experimented with the size of the support set. As expected, a larger support set results in better query and test performance, although the benefit of adding more samples diminishes as the training set grows (Fig. 6).

Interestingly, with small support set sizes (1-3) there are random shifts in query versus test set performance: one can improve while the other decreases. At a support set size of 4, however, both sets improve.

Figure 6. Effect of support set size on query and test set performance with the baseline model

Moving on from the baseline model, fine-tuning with the Adam optimizer on mini-batches from the support set improves the scores by 2% for both query and test sets. Adding entropy regularization on the query set to the fine-tuning pipeline improves the result by a further 1-4%.
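One common way to express this kind of objective is cross-entropy on the labeled support samples plus the average Shannon entropy of the predictions on the unlabeled query samples. The sketch below shows only the loss computation under that assumption. The function names, the `weight` parameter, and the toy logits are illustrative, and the Adam update itself is not implemented here.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label_index):
    """Negative log-probability of the true class."""
    return -math.log(softmax(logits)[label_index])


def entropy(logits):
    """Shannon entropy of the predicted distribution; low means confident."""
    return -sum(p * math.log(p) for p in softmax(logits) if p > 0)


def fine_tuning_loss(support_logits, support_labels, query_logits, weight=1.0):
    """Supervised loss on the support set plus an entropy penalty on the query set."""
    ce = sum(cross_entropy(l, y) for l, y in zip(support_logits, support_labels))
    ce /= len(support_logits)
    ent = sum(entropy(l) for l in query_logits) / len(query_logits)
    return ce + weight * ent


# Toy two-class logits (assumption: produced by a classifier head on the encoder).
support_logits = [[2.0, 0.0], [0.0, 2.0]]
support_labels = [0, 1]
confident_queries = [[4.0, -4.0], [-4.0, 4.0]]
uncertain_queries = [[0.1, 0.0], [0.0, 0.1]]
loss_confident = fine_tuning_loss(support_logits, support_labels, confident_queries)
loss_uncertain = fine_tuning_loss(support_logits, support_labels, uncertain_queries)
```

The entropy term needs no query labels: it only rewards the model for making confident predictions on the query images, which is why it can be applied to data that is otherwise used for validation.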

It is important to point out that accuracy improves alongside the weighted F1 score, meaning that the model performs better not only class-wise but also in relation to the total amount of data. The final accuracy of 0.7 and 0.68 for query and test sets means the model that had only 4 image examples for training could successfully guess the class of the image in 40/57 examples for query and 21/31 examples for test set.

Table 1. Few-shot metrics on val/test datasets

| Run settings | Accuracy (query set) | Accuracy (test set) | Weighted F1 score (query set) | Weighted F1 score (test set) |
|---|---|---|---|---|
| Baseline | 0.67 | 0.61 | 0.66 | 0.62 |
| Baseline + fine tuning | 0.68 | 0.65 | 0.68 | 0.64 |
| Baseline + fine tuning + regularization | 0.7 | 0.68 | 0.69 | 0.69 |

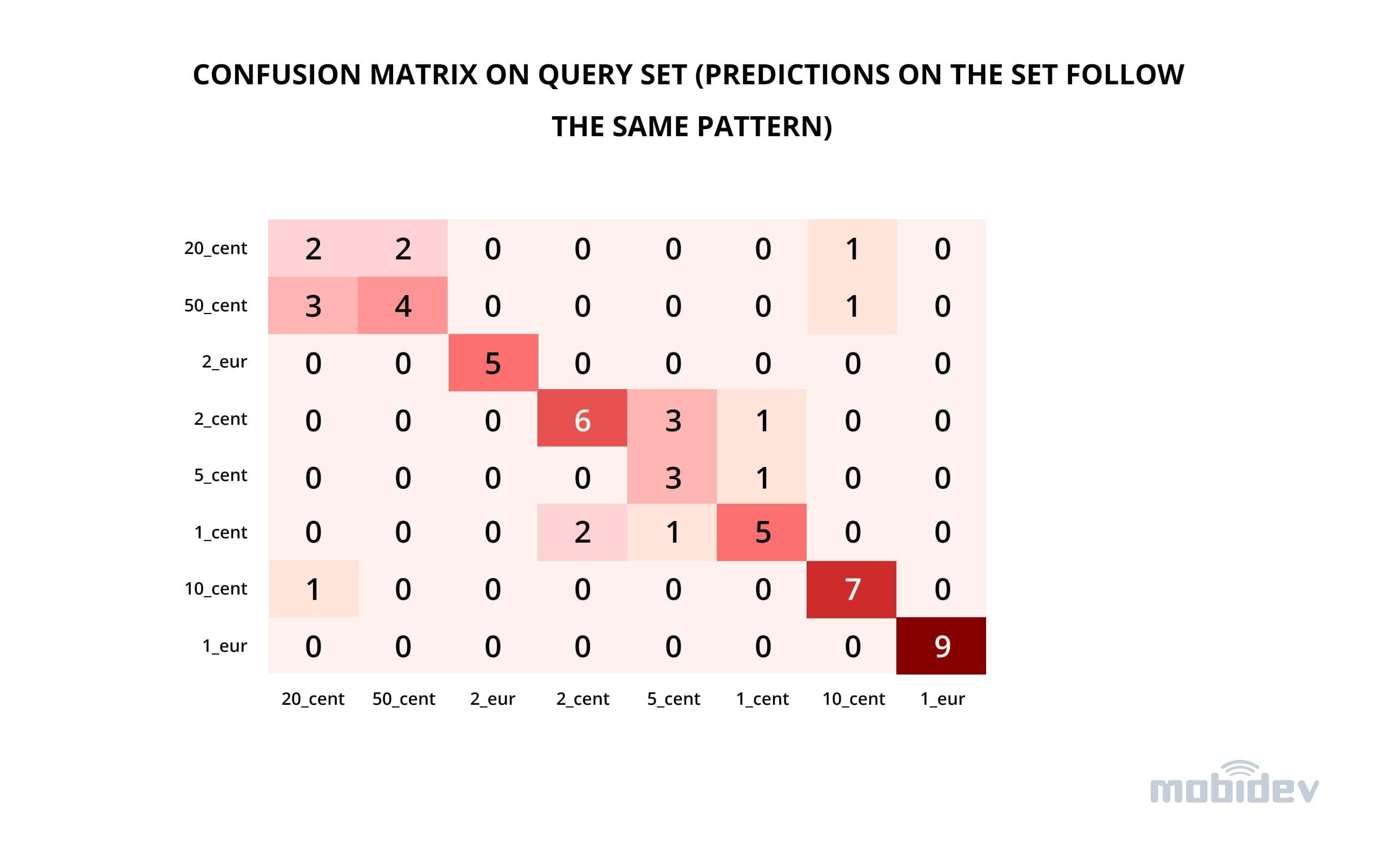

Looking at the confusion matrix on the query set (Fig. 7), we can spot some interesting tendencies. Firstly, the easiest categories for the model to guess were the high-denomination 1 and 2 euro coins, because these coins are made of two parts, an inner and an outer one. The two parts immediately distinguish them from the low-denomination coins.

In addition, in 1 euro coins the inner part is made of a grey copper-nickel alloy, while the outer part is made of a golden nickel-brass alloy. In 2 euro coins, the alloys are reversed: copper-nickel for the outer part and nickel-brass for the inner part. This peculiarity makes it easier to tell one coin from the other.

The two pairs that were hardest to tell apart were 20 vs 50 cents and 5 vs 2 cents, which the model often confused with each other. The reason is that these categories differ very little: the color, the shape of the coins, and even the textual inscriptions are the same. The only distinction is the denomination inscription itself. Since the model was not specifically trained on coins, it does not know which areas of a coin deserve the most attention and can therefore undervalue the importance of the denomination inscription.

Figure 7. Confusion matrix on query set (predictions on the test set follow the same pattern)

Wrapping Up

In a demanding world of practical Machine Learning, there is always a balance between the accuracy of the developed solutions and the amount of work and data the solution requires. Sometimes, it is preferable to quickly create a solution that meets only minimum accuracy requirements, deploy it in production, and iterate from there. In this scenario, the approach described in this article could work well.

We found out that for the problem of few-shot classification of coins by image, it is possible to reach 70% accuracy given as few as 4 image examples per coin denomination.

Understanding few-shot learning in machine learning

Machine learning has experienced tremendous growth in recent years. The factors fuelling this growth include increasingly sophisticated algorithms and learning models, the growing computing capability of machines, and the availability of big data.

AndreyBu, who has more than five years of machine learning experience and currently teaches people his skills, says that “data is the life-blood of training machine learning models that ensure their success.” “A learning model fed with sufficient, quality data is likely to yield results that are more accurate,” he adds.

However, accruing enough data to increase the accuracy of the models is sometimes unrealistic. For example, in large-scale business settings, labeling samples becomes costly and difficult to manage.

In such limited-data and challenging scenarios, few-shot learning algorithms have been employed successfully to discover patterns in data and make beneficial predictions.

What is few-shot learning?

As the name implies, few-shot learning refers to the practice of feeding a learning model with a very small amount of training data, contrary to the normal practice of using a large amount of data.

This technique is mostly used in computer vision, where an object categorization model can still give appropriate results without many training samples.

For example, if we need to categorize bird species from photos, some rare species may lack enough pictures for the training set.

Consequently, if we have a classifier for bird images but an insufficient dataset, we treat it as a few-shot or low-shot machine learning problem.

If we have only one image of a bird, this would be a one-shot machine learning problem. In extreme cases, where we do not have every class label in the training, and we end up with 0 training samples in some categories, it would be a zero-shot machine learning problem.


Motivations for few-shot machine learning

Low-shot deep learning is based on the idea that reliable algorithms can be created to make predictions from minimal datasets.

Here are some situations that are driving its increased adoption:

Low-shot learning approaches

Generally, two main approaches are used to solve few-shot or one-shot machine learning problems:

a) Data-level approach

This approach is based on the concept that whenever there is insufficient data to fit the parameters of the algorithm and avoid underfitting or overfitting the data, then more data should be added.

A common technique used to realize this is to tap into an extensive collection of external data sources. For example, if the intention is to create a classifier for the species of birds without sufficient labeled elements for each category, it could be necessary to look into other external data sources that have images of birds. In this case, even unlabeled images can be useful, especially if included in a semi-supervised manner.

In addition to utilizing external data sources, another technique for data-based low-shot learning is to produce new data. For example, a data augmentation technique can be employed to add random noise to the images of birds.

Alternatively, new image samples can be produced using the generative adversarial networks (GANs) technology. For example, with this technology, new images of birds can be produced from different perspectives if there are enough examples available in the training set.

b) Parameter-level approach

Because data is scarce, few-shot learning problems can involve high-dimensional parameter spaces that are too extensive for the available samples. To overcome overfitting, the parameter space can be limited.

Regularization techniques or suitable loss functions are often employed for this in machine learning, and they can be applied to low-shot problems as well.

In this case, the algorithm is compelled to generalize from the limited number of training samples. Another technique is to improve the accuracy of the algorithm by guiding it through the extensive parameter space.

A standard optimization algorithm, such as stochastic gradient descent (SGD), may not give the desired results in a high-dimensional space because there is too little training data.

Instead, the algorithm is taught to take the best route through the parameter space to give optimal prediction results. This technique is typically referred to as meta-learning.

For example, a teacher algorithm can be trained on a large quantity of data to learn how to encapsulate the parameter space. Then, when the real classifier (the student) is trained, the teacher algorithm guides the student through the extensive parameter space to achieve the best training results.

Few-shot learning in machine learning is proving to be the go-to solution whenever a very small amount of training data is available. The technique is useful in overcoming data scarcity challenges and reducing costs.


Few-shot learning and other scary words in text classification

no matter how hard we struggled to tell them apart.”

A brief excursion into text classification

A lot of information about the main approaches to text classification can be found online, including in the very courses I have already mentioned. In my opinion, the most interesting of the recent courses is this one.

Nevertheless, cleaning the text plays a very important role, whether you analyze the text yourself or analyze the output of your models (which is in fact important and fairly labor-intensive; see, for example, the article).

Problem statement and approaches to the solution

At this point it is customary to add pictures showing a set of words in a two-dimensional space which, lo and behold, turn out to lie close to each other in meaning. Instead, I will give a passage in which the words have been replaced by their nearest neighbors by cosine distance in the space of Word2Vec embeddings (thanks to this repository) trained on classic Russian literature, and I invite you to guess where the passage comes from:

Alternatively, you can take a language model for texts rather than for words, for example BERT. In essence, it does the same thing we did for the texts about telescopes and stars, except that it knows nothing about the world and takes all of its information from the texts it is given. It also assigns the numbers the other way around. Above, I assigned numbers based on some knowledge, whereas the language model assigns them any way it can, as long as it is not penalized. As a result, each text also gets a set of numbers, but none of them is interpretable on its own. Moreover, they are formed with the aim of solving a specific task.

Solution

And it turns out that there can be several approaches to solving this problem. Unlike Rurik and Octavian, they may at first seem completely different, but to one degree or another they all lead to the same result.

If you look at the literature on problems of this type, you will find solutions that follow both the first path and the second. The third, naturally, is not covered in papers.

If we use t-SNE to move to a two-dimensional space, the distribution of objects in it before fine-tuning (left) and after (right) looks as follows:

Color marks the true classes of the objects, and it is clear that after fine-tuning the classes cluster noticeably better than before. In addition, distinct separate topics stand out, which are interesting to explore.

Generalizing NLP tasks

Above, I described how one task can be solved in several ways, but the NLP literature shows another trend as well: solving several tasks in one way. For example, the authors of the paper propose CrossFit, an approach to building a general-purpose language model using data from a whole set of tasks. To do this, they do several interesting things.

They split the data into “train” and “test” not only by data but also by task: some tasks are used to train the model, and others are held out for testing.

They introduce a unified notation in which the model takes text as input and is expected to produce text as output, regardless of whether the task is classification or question answering.

They introduce an aggregate metric that makes it possible to average changes in quality metrics across different tasks, which is necessary because the metrics differ from task to task.
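The unified text-in, text-out notation can be illustrated with a rough sketch. The prompt templates and helper names below are hypothetical and are not taken from the CrossFit paper. They only show how a classification example and a question-answering example can both be reduced to a pair of strings.

```python
def classification_to_text(text, options):
    """Render a classification example as plain input text (hypothetical template)."""
    return f"classify: {text} options: {', '.join(options)}"


def qa_to_text(question, context):
    """Render a question-answering example as plain input text (hypothetical template)."""
    return f"question: {question} context: {context}"


# Every task becomes a list of (input text, target text) pairs.
examples = [
    (classification_to_text("The telescope arrived broken.", ["positive", "negative"]),
     "negative"),
    (qa_to_text("What orbits the Sun?", "The Earth orbits the Sun."),
     "The Earth"),
]

for source, target in examples:
    print(source, "->", target)
```

Once every task has this shape, a single sequence-to-sequence model can be trained on some tasks and evaluated on others without any task-specific output layers.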

In conclusion, I would like to say that simple principles can stand behind complicated words, and there is no need to be afraid of them. Once you have learned them, do not stick them onto particular tasks as labels; try to think more broadly instead.